Getting familiar with PyTorch data structures (code included)

In this introduction to PyTorch data structures, we will learn how to create and reshape tensors using PyTorch and compare them with NumPy arrays.

PyTorch is a popular Python library for deep learning. We will learn the basics of PyTorch and its core data structure, the tensor: how to create one and how to perform some common operations on it.

A tensor can be thought of as a generalized matrix, so it can have zero, one, two, or N dimensions. We generally call a 0-D tensor a scalar (a single number), a 1-D tensor a vector, and a 2-D tensor a matrix; higher-dimensional ones are simply called tensors.

In mathematics, tensors let us express a problem without designing our equations around a particular number of dimensions. Similarly, in data science, if we write our code in terms of tensors, the same code can work with a single number, a matrix, or more complex data such as images.
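As a quick illustration of these names, the sketch below builds one tensor of each rank and prints its number of dimensions with `dim()`. The values, and the 3 x 28 x 28 "image-like" shape, are arbitrary choices made for this example only.

```python
import torch

scalar = torch.tensor(3.5)                # 0-D: a single number
vector = torch.tensor([1.0, 2.0, 3.0])    # 1-D
matrix = torch.tensor([[1, 2], [3, 4]])   # 2-D
image_like = torch.zeros(3, 28, 28)       # 3-D, e.g. channels x height x width (hypothetical shape)

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("image_like", image_like)]:
    # dim() returns the number of dimensions; shape gives the size of each one
    print(name, t.dim(), tuple(t.shape))
```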

Import PyTorch and NumPy

Let us start by importing the libraries:

import torch

import numpy as np

print(torch.__version__)

1.1.0

The last command prints the installed PyTorch version, which confirms that PyTorch is available in the current environment. Your version number may differ from the one shown here.

Creating a tensor

Now, let us create a NumPy array that we will convert into a tensor.

myarr = np.arange(1,6)

print(myarr)

print(myarr.dtype)

print(type(myarr))

[1 2 3 4 5]

int64

<class 'numpy.ndarray'>

To convert the NumPy array to a tensor, we can use torch.from_numpy:

tt = torch.from_numpy(myarr)

print(tt)

print(tt.type())

tensor([1, 2, 3, 4, 5])

torch.LongTensor

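torch.from_numpy does not copy the data: tt shares memory with the original array myarr, so modifying one also modifies the other. If we want an independent copy instead, we can use torch.tensor, which always copies the data: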

tt = torch.tensor(myarr)

print(tt)

print(tt.type())

tensor([1, 2, 3, 4, 5])

torch.LongTensor
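To see the difference between the two conversions, the sketch below modifies the NumPy array after converting it both ways. The variable names `shared` and `copied` are just illustrative.

```python
import numpy as np
import torch

myarr = np.arange(1, 6)

shared = torch.from_numpy(myarr)   # shares memory with myarr
copied = torch.tensor(myarr)       # independent copy of the data

myarr[0] = 100                     # modify the NumPy array in place

print(shared)  # reflects the change: the first element is now 100
print(copied)  # unaffected: still starts with 1
```

Sharing memory avoids a copy, which can matter for large arrays, but it also means in-place changes on either side are visible on the other.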

Now, let us create a 2D tensor:

my2darr = np.arange(0.,12.).reshape(4,3) # create a 1-D NumPy array and reshape it into a 4 x 3 array

print(my2darr)

tt2 = torch.tensor(my2darr)

print(tt2)

print(tt2.type())

[[ 0. 1. 2.]

[ 3. 4. 5.]

[ 6. 7. 8.]

[ 9. 10. 11.]]

tensor([[ 0., 1., 2.],

[ 3., 4., 5.],

[ 6., 7., 8.],

[ 9., 10., 11.]], dtype=torch.float64)

torch.DoubleTensor

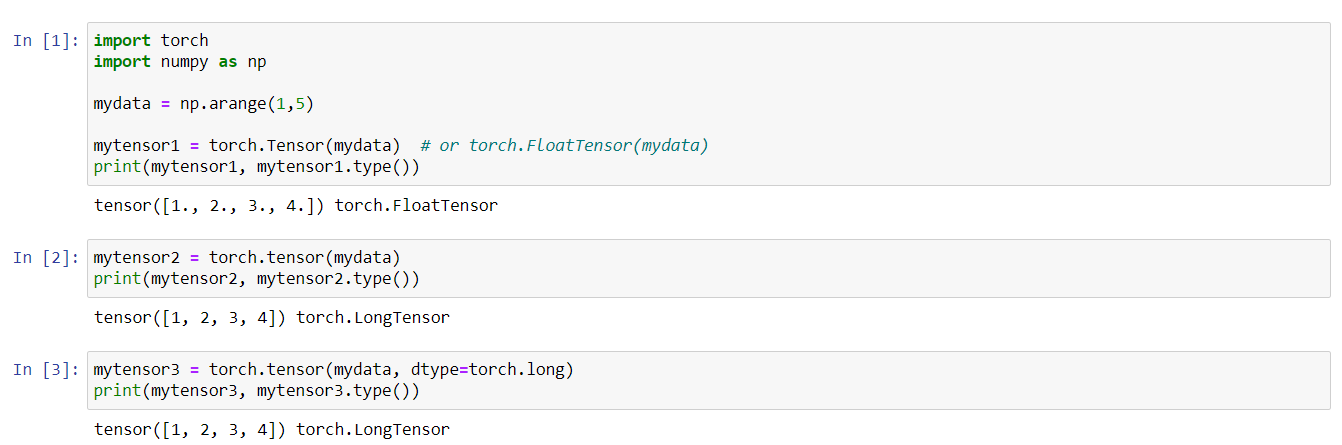
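The 2-D tensor above keeps NumPy's float64 dtype (torch.DoubleTensor). PyTorch's default floating-point dtype is float32, so a common step is to convert explicitly; the sketch below does that with `float()` and converts back to NumPy with `numpy()` (which works for CPU tensors). This conversion is an addition for illustration, not part of the original walkthrough.

```python
import numpy as np
import torch

my2darr = np.arange(0., 12.).reshape(4, 3)
tt2 = torch.tensor(my2darr)

print(tt2.dtype)                  # float64, inherited from the NumPy array
print(torch.get_default_dtype())  # float32 unless the default has been changed

tt2_f32 = tt2.float()             # same as tt2.to(torch.float32); returns a new tensor
print(tt2_f32.dtype)

back = tt2_f32.numpy()            # CPU tensor -> NumPy array (shares memory with tt2_f32)
print(back.dtype, back.shape)
```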

Three main ways of defining a tensor in PyTorch

mydata = np.arange(1,5)

mytensor1 = torch.Tensor(mydata) # equivalent to torch.FloatTensor(mydata) with the default settings

print(mytensor1, mytensor1.type())

tensor([1., 2., 3., 4.]) torch.FloatTensor

mytensor2 = torch.tensor(mydata)

print(mytensor2, mytensor2.type())

tensor([1, 2, 3, 4]) torch.LongTensor

mytensor3 = torch.tensor(mydata, dtype=torch.long)

print(mytensor3, mytensor3.type())

tensor([1, 2, 3, 4]) torch.LongTensor
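The three forms differ in how the dtype is chosen: torch.Tensor uses the default floating-point type, torch.tensor infers the dtype from the data, and the dtype argument overrides that inference. The PyTorch documentation recommends torch.tensor for creating a tensor from existing data. One pitfall worth checking is that torch.Tensor treats a single integer as a size, not as a value:

```python
import numpy as np
import torch

mydata = np.arange(1, 5)

t1 = torch.Tensor(mydata)                       # default float dtype (float32 unless changed)
t2 = torch.tensor(mydata)                       # dtype inferred from the integer array
t3 = torch.tensor(mydata, dtype=torch.float32)  # dtype set explicitly
print(t1.dtype, t2.dtype, t3.dtype)

# A single integer: torch.Tensor reads it as a shape, torch.tensor as a value
print(torch.Tensor(3).shape)  # a 1-D tensor of length 3 with uninitialized values
print(torch.tensor(3).shape)  # a 0-D tensor holding the number 3
```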

Common NumPy-style functions for creating tensors are also available:

## Random Numbers

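x = torch.rand(4, 3) # uniform random numbers in [0, 1)

print(x)

x = torch.randn(4, 3) # samples from the standard normal distribution (mean 0, variance 1)

print(x)

x = torch.randint(0, 5, (4, 3)) # random integers from 0 (inclusive) to 5 (exclusive)

print(x)

x = torch.empty(4, 3) # uninitialized memory: the values are arbitrary and change between runs

print(x)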

## Initialization

x = torch.zeros(2,5)

print(x)

tensor([[0.2137, 0.1635, 0.0262],

[0.5090, 0.6493, 0.2037],

[0.3697, 0.9471, 0.7771],

[0.1593, 0.9452, 0.0312]])

tensor([[-0.8143, -2.0780, 0.5173],

[ 0.1504, -0.2943, 0.4080],

[ 1.0094, 1.9406, 0.5446],

[ 0.2792, -1.6270, -0.4240]])

tensor([[3, 1, 2],

[4, 1, 3],

[0, 1, 4],

[1, 4, 4]])

tensor([[7.1664e-10, 4.5630e-41, 4.4871e+20],

[3.0938e-41, 0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00],

[0.0000e+00, 1.8788e+31, 1.7220e+22]])

tensor([[0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0.]])
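A few more creation functions mirror their NumPy counterparts. The sketch below is a small sample, not an exhaustive list; the shapes and fill values are arbitrary.

```python
import torch

ones = torch.ones(2, 3)              # all ones
sevens = torch.full((2, 3), 7.0)     # filled with a constant value
steps = torch.arange(0, 10, 2)       # like np.arange: 0, 2, 4, 6, 8
eye = torch.eye(3)                   # 3 x 3 identity matrix
zeros_like = torch.zeros_like(ones)  # same shape and dtype as `ones`, filled with zeros

print(ones, sevens, steps, eye, zeros_like, sep="\n")
```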

We can also set the random number seed, just as in NumPy, so that the results are reproducible:

torch.manual_seed(42)

x = torch.rand(2, 3)

print(x)

print(x.shape)

tensor([[0.8823, 0.9150, 0.3829],

[0.9593, 0.3904, 0.6009]])

torch.Size([2, 3])
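To check that seeding really makes results reproducible, the sketch below resets the seed and draws the same tensor twice. It also shows a dedicated torch.Generator, which keeps a random stream separate from the global seed; using a generator is an optional alternative, not something the original relies on.

```python
import torch

torch.manual_seed(42)
first = torch.rand(2, 3)

torch.manual_seed(42)        # reset the global seed
second = torch.rand(2, 3)    # the same sequence is produced again

print(torch.equal(first, second))  # True

# A separate generator with its own seed, independent of the global state
gen = torch.Generator().manual_seed(42)
third = torch.rand(2, 3, generator=gen)
print(third)
```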

PyTorch supports multiple devices

print(x.device) #'cpu' or 'cuda'

PyTorch can run computations on GPUs (CUDA devices) as well as on the CPU. Tensors are created on the CPU unless we specify otherwise.
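A common device-agnostic pattern is to pick the GPU when one is available and fall back to the CPU otherwise. This is a minimal sketch; tensors involved in the same operation generally need to be on the same device.

```python
import torch

# Use a CUDA GPU if available, otherwise the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.rand(2, 3)                  # created on the CPU by default
print(x.device)                       # cpu

x = x.to(device)                      # move the tensor (returns x itself if already there)
y = torch.ones(2, 3, device=device)   # or create a tensor directly on the device
print((x + y).device)                 # both operands live on the same device
```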

PyTorch Object Memory Layout

x = torch.Tensor([[1, 2, 3, 4, 5], [6, 7, 8, 9, 10]])

print(x.layout) #strided as default

torch.strided

At the time of writing, PyTorch supports torch.strided (dense tensors) as the default layout and has beta support for torch.sparse_coo (sparse COO tensors).
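"Strided" means each dimension has a stride: how many elements to skip in memory to move one step along that dimension. The sketch below inspects the strides of the 2 x 5 tensor from above, shows that a transpose only swaps the strides, and converts the tensor to the sparse COO layout with `to_sparse()`.

```python
import torch

x = torch.Tensor([[1, 2, 3, 4, 5], [6, 7, 8, 9, 10]])

print(x.stride())          # (5, 1): 5 elements to the next row, 1 to the next column
print(x.is_contiguous())   # True

xt = x.t()                 # transpose: same memory, strides swapped
print(xt.stride())         # (1, 5)
print(xt.is_contiguous())  # False

xs = x.to_sparse()         # convert to the sparse COO layout
print(xs.layout)           # torch.sparse_coo
print(xs.to_dense().equal(x))  # True: converting back recovers the dense tensor
```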

View or Reshape

x = torch.linspace(1,5, 10)

print(x)

print(x.view(2,5))

print(x)

print(x.reshape(2,5))

print(x)

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

tensor([[1.0000, 1.4444, 1.8889, 2.3333, 2.7778],

[3.2222, 3.6667, 4.1111, 4.5556, 5.0000]])

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

tensor([[1.0000, 1.4444, 1.8889, 2.3333, 2.7778],

[3.2222, 3.6667, 4.1111, 4.5556, 5.0000]])

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

The view method does not change the original tensor; it returns a tensor with the requested shape, and x itself keeps its original shape. The reshape method behaves the same way here. view has been part of PyTorch for a long time, while reshape was added in a later release.

The main thing to keep in mind is whether the original tensor is modified. Neither method changes the shape of x in place, so if we want x itself to have the new shape, we reassign the result to x:

## With reshape

x = torch.linspace(1,5, 10)

print(x)

x = x.reshape(2,5)

print(x)

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

tensor([[1.0000, 1.4444, 1.8889, 2.3333, 2.7778],

[3.2222, 3.6667, 4.1111, 4.5556, 5.0000]])

## With view

x = torch.linspace(1,5, 10)

print(x)

x = x.view(2,5)

print(x)

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

tensor([[1.0000, 1.4444, 1.8889, 2.3333, 2.7778],

[3.2222, 3.6667, 4.1111, 4.5556, 5.0000]])

x = torch.linspace(1,5, 10)

print(x)

z = x.view(2,5)

x[0]=234

print(z) #z changed

print(x) #x changed

tensor([1.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667, 4.1111, 4.5556,

5.0000])

tensor([[234.0000, 1.4444, 1.8889, 2.3333, 2.7778],

[ 3.2222, 3.6667, 4.1111, 4.5556, 5.0000]])

tensor([234.0000, 1.4444, 1.8889, 2.3333, 2.7778, 3.2222, 3.6667,

4.1111, 4.5556, 5.0000])

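From the above code, we can see that view does not copy the data: z refers to the same memory as x, so changing x also changes z. reshape also returns a view like this whenever the tensor's memory layout allows it, but it may return a copy otherwise (for example, for a non-contiguous tensor), so it is safer not to rely on either behavior. Sharing memory can be convenient or inconvenient, depending on the use case.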
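The difference between view and reshape shows up with a non-contiguous tensor, such as a transpose. In the sketch below, view raises an error while reshape falls back to copying; `clone()` is used to get an explicitly independent copy. The small 2 x 3 tensor is chosen only for illustration.

```python
import torch

x = torch.arange(6).view(2, 3)   # [[0, 1, 2], [3, 4, 5]]
t = x.t()                        # transpose: a non-contiguous view of the same memory

try:
    t.view(6)                    # view needs a memory layout compatible with the new shape
except RuntimeError as err:
    print("view failed:", err)

flat = t.reshape(6)              # reshape copies the data in this case
print(flat)                      # tensor([0, 3, 1, 4, 2, 5])

independent = x.view(6).clone()  # clone() always makes an independent copy
x[0, 0] = 100                    # modify the original
print(independent[0])            # tensor(0): the clone is unaffected
```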

Arithmetic with tensors

a = torch.tensor([1,2,3], dtype=torch.float)

b = torch.tensor([4,5,6], dtype=torch.float)

print(a + b)

print(torch.add(a, b))

tensor([5., 7., 9.])

tensor([5., 7., 9.])
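The other basic arithmetic operators work element-wise in the same way, and each has a function equivalent. A short sketch using the same a and b:

```python
import torch

a = torch.tensor([1, 2, 3], dtype=torch.float)
b = torch.tensor([4, 5, 6], dtype=torch.float)

print(a - b, torch.sub(a, b))   # element-wise subtraction
print(a * b, torch.mul(a, b))   # element-wise multiplication
print(a / b, torch.div(a, b))   # element-wise division
print(a ** 2, torch.pow(a, 2))  # element-wise power
```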

We can also write the result into a preallocated tensor using the out argument:

result = torch.empty(3)

torch.add(a, b, out=result)

print(result)

tensor([5., 7., 9.])
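Besides out, most arithmetic methods have an in-place variant whose name ends with an underscore, such as add_. It modifies the tensor it is called on instead of returning a new one, as this sketch shows:

```python
import torch

a = torch.tensor([1, 2, 3], dtype=torch.float)
b = torch.tensor([4, 5, 6], dtype=torch.float)

c = a.add(b)   # returns a new tensor; a is unchanged
print(a, c)

a.add_(b)      # in-place: a itself now holds a + b
print(a)
```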

We can compute the element-wise product with the mul method and the dot product with the dot method:

a = torch.tensor([1,2,3], dtype=torch.float)

b = torch.tensor([4,5,6], dtype=torch.float)

print(a, b)

print(a.mul(b))

print(a.dot(b))

tensor([1., 2., 3.]) tensor([4., 5., 6.])

tensor([ 4., 10., 18.])

tensor(32.)
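The dot product is the sum of the element-wise products, 1·4 + 2·5 + 3·6 = 32. A quick check that the two routes agree:

```python
import torch

a = torch.tensor([1, 2, 3], dtype=torch.float)
b = torch.tensor([4, 5, 6], dtype=torch.float)

manual = (a * b).sum()   # 4 + 10 + 18
print(manual, a.dot(b), torch.equal(manual, a.dot(b)))
```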

Unlike NumPy's dot function, which also accepts 2-D arrays, PyTorch's dot method only accepts 1-D tensors. For 2-D tensors, we use matrix multiplication instead:

a = torch.tensor([[0,2,4],[1,3,5]], dtype=torch.float)

b = torch.tensor([[6,7],[8,9],[10,11]], dtype=torch.float)

print(a)

print(b)

print('a: ',a.shape)

print('b: ',b.shape)

print(torch.mm(a,b)) #also a @ b or a.mm(b)

print('a x b: ',torch.mm(a,b).shape)

tensor([[0., 2., 4.],

[1., 3., 5.]])

tensor([[ 6., 7.],

[ 8., 9.],

[10., 11.]])

a: torch.Size([2, 3])

b: torch.Size([3, 2])

tensor([[56., 62.],

[80., 89.]])

a x b: torch.Size([2, 2])
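torch.mm works only on 2-D tensors. torch.matmul, which the @ operator calls, is more general: for two 1-D inputs it computes a dot product, and for inputs with more than two dimensions it treats the leading dimensions as a batch and broadcasts them. A sketch with arbitrary shapes:

```python
import torch

a = torch.tensor([[0, 2, 4], [1, 3, 5]], dtype=torch.float)      # 2 x 3
b = torch.tensor([[6, 7], [8, 9], [10, 11]], dtype=torch.float)  # 3 x 2

print(torch.equal(a @ b, torch.mm(a, b)))  # True for 2-D inputs

v = torch.tensor([1, 2, 3], dtype=torch.float)
print(torch.matmul(v, v))                  # 1-D with 1-D: dot product, tensor(14.)

batch = torch.rand(4, 2, 3)                # a batch of four 2 x 3 matrices
out = torch.matmul(batch, b)               # b is broadcast across the batch
print(out.shape)                           # torch.Size([4, 2, 2])
```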

Disclaimer of liability

The information provided by Earth Inversion is made available for educational purposes only.

Whilst we endeavor to keep the information up to date and correct, Earth Inversion makes no representations or warranties of any kind, express or implied about the completeness, accuracy, reliability, suitability or availability with respect to the website or the information, products, services or related graphics content on the website for any purpose.

UNDER NO CIRCUMSTANCE SHALL WE HAVE ANY LIABILITY TO YOU FOR ANY LOSS OR DAMAGE OF ANY KIND INCURRED AS A RESULT OF THE USE OF THE SITE OR RELIANCE ON ANY INFORMATION PROVIDED ON THE SITE. ANY RELIANCE YOU PLACED ON SUCH MATERIAL IS THEREFORE STRICTLY AT YOUR OWN RISK.